OK, so a gram of DNA can store 455 exabytes ~= 4E21 bits. That is quite respectable, but let's compare it to the initialization requirements of the QA-grid:

- 1 bit per 3D voxel

- length of side of voxel = Planck length = 1.6E-35 meters

- length of side of grid = 2000 light-years

-> number of voxels per side of grid = 1.17E54

-> total number of voxels = 1.6E162 which is also the number of bits needed to store the initial state.

The mass of DNA needed to store 1.6E162 bits = 4E140 grams, which is about 2E107 solar masses. Note that the estimated number of particles in this universe is 1E82, so even if you assign just one particle to each solar-mass DNA storage unit, you are going to run out of universe about 25 orders of magnitude before the system is complete. I would hazard a guess that this kind of QA-system will never be built.
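For anyone who wants to re-run the estimate or try a different grid size, here is a minimal Python sketch of the same chain of steps. The inputs from the post are the 455 exabytes per gram, the Planck-length voxel, the 2000 light-year side and the 1E82 particle count. The metres-per-light-year and grams-per-solar-mass constants are standard approximations that I am assuming, and the function name `grid_estimate` is just made up for illustration.

```python
import math

# Inputs taken from the post
BITS_PER_GRAM_DNA = 455e18 * 8      # 455 exabytes per gram, expressed in bits
GRID_SIDE_LY = 2000                 # side of the grid in light-years
PARTICLES_IN_UNIVERSE = 1e82        # estimate quoted in the post

# Assumed standard constants (not stated in the post)
PLANCK_LENGTH_M = 1.616e-35         # voxel side in metres
LIGHT_YEAR_M = 9.461e15             # metres per light-year
SOLAR_MASS_G = 1.989e33             # grams per solar mass


def grid_estimate(side_ly=GRID_SIDE_LY):
    """Return the storage estimate for a cubic grid, one bit per voxel."""
    voxels_per_side = side_ly * LIGHT_YEAR_M / PLANCK_LENGTH_M
    total_bits = voxels_per_side ** 3
    dna_grams = total_bits / BITS_PER_GRAM_DNA
    solar_masses = dna_grams / SOLAR_MASS_G
    # Orders of magnitude by which the number of solar-mass storage units
    # exceeds the number of particles available
    shortfall_orders = math.log10(solar_masses / PARTICLES_IN_UNIVERSE)
    return {
        "voxels_per_side": voxels_per_side,
        "total_bits": total_bits,
        "dna_grams": dna_grams,
        "solar_masses": solar_masses,
        "shortfall_orders": shortfall_orders,
    }


if __name__ == "__main__":
    for name, value in grid_estimate().items():
        print(f"{name}: {value:.3g}")
```

Calling `grid_estimate(side_ly=...)` with another side length shows how fast the cube term dominates: every factor of 10 in the side adds 3 orders of magnitude to everything downstream.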

http://www.universet...n-the-universe/

Indeed, I thought I was sticking my neck out on DNA storage! It is one route, but by no means the only one.

We should co-write a Longecity/Kurzweil forums book on this, as you seem to understand science! I wondered whether books would become obsolete, but even more are being published.

I don't think storage needs to be used like that. It's simpler to do it that way of course, but even now there's too much data to use without A.I.s, and my idea is to inflate it where you need it. You obviously can't look at the whole universe at once, even in the limited detail we have (the Illustris Simulation).

You just need variables and grid references. You inflate the bits you need. For resurrecting people you don't need the whole universe, just coordinates.

So you store the math as recipes for the components you need, and draw them into detailed configurations.

Inflating uses components which are identical.
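One way to picture "inflate the bits you need" is procedural generation: keep a small rule plus grid references, and compute voxels only for the region asked for. The Python sketch below is an illustration under my own assumptions, not a method from this thread. The names `voxel_bit` and `inflate` and the seed string are hypothetical, and a SHA-256 hash simply stands in for whatever "math recipe" would really be stored.

```python
import hashlib


def voxel_bit(seed: bytes, x: int, y: int, z: int) -> int:
    """Derive one bit for a voxel from the stored rule and its grid reference."""
    digest = hashlib.sha256(seed + f"{x},{y},{z}".encode()).digest()
    return digest[0] & 1


def inflate(seed: bytes, origin, size: int):
    """Expand only the requested cube; nothing outside it is ever stored."""
    ox, oy, oz = origin
    return [
        [
            [voxel_bit(seed, ox + i, oy + j, oz + k) for k in range(size)]
            for j in range(size)
        ]
        for i in range(size)
    ]


if __name__ == "__main__":
    # Python integers are unbounded, so Planck-scale coordinates are fine.
    region = inflate(b"universe-rule-v1", origin=(10**50, 0, 0), size=4)
    print(region[0][0])
```

The same seed and coordinates always give the same bits, so overlapping regions agree without anything being saved in between. What the sketch does not show is how to find a rule whose output matches a real person or place; it only shows that storage can be traded for computation once such a rule exists.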

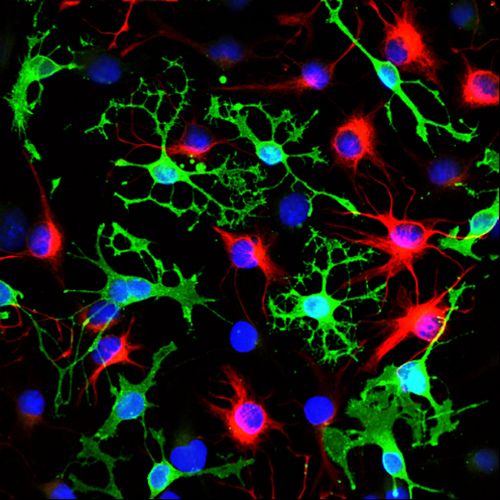

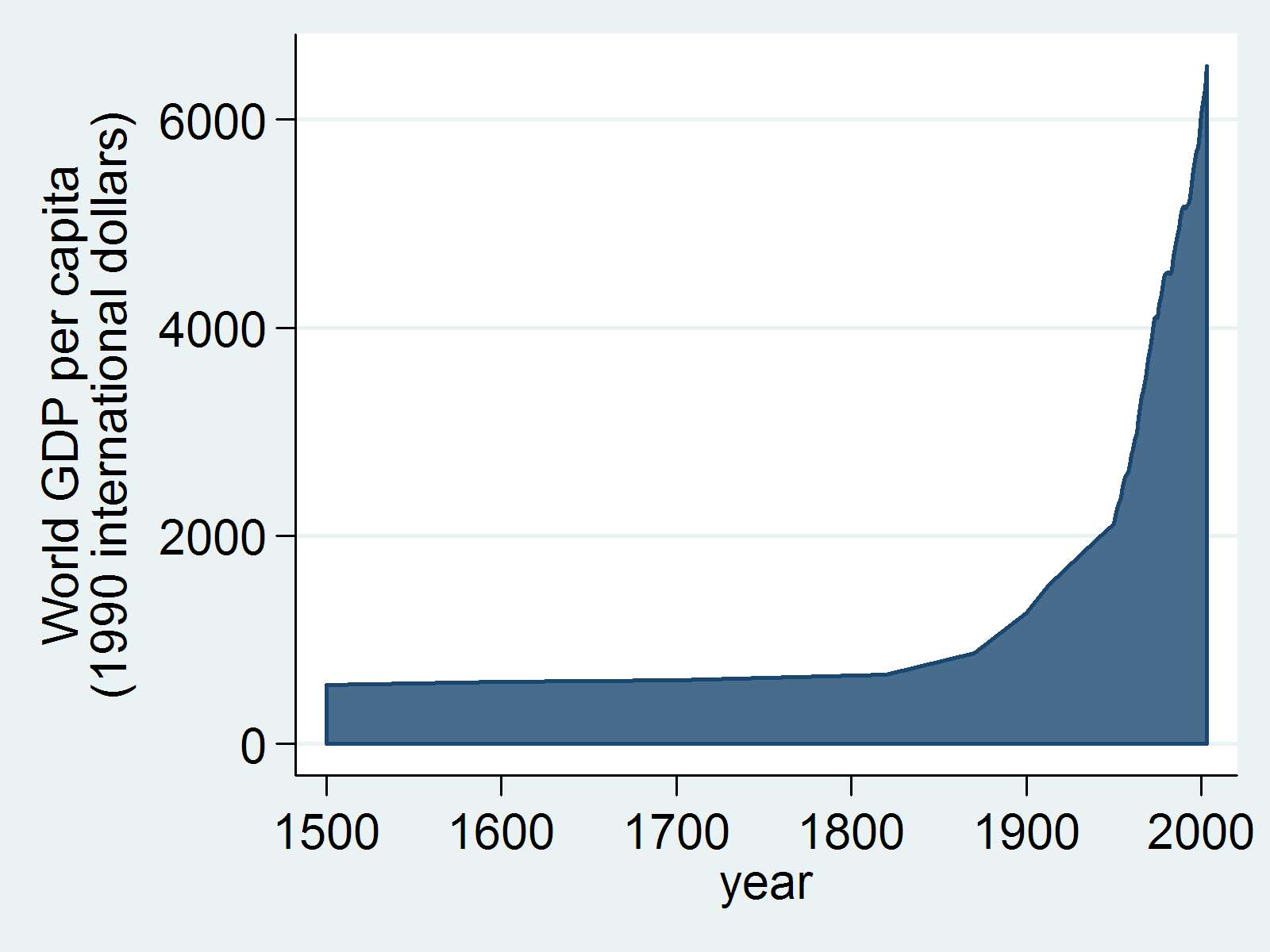

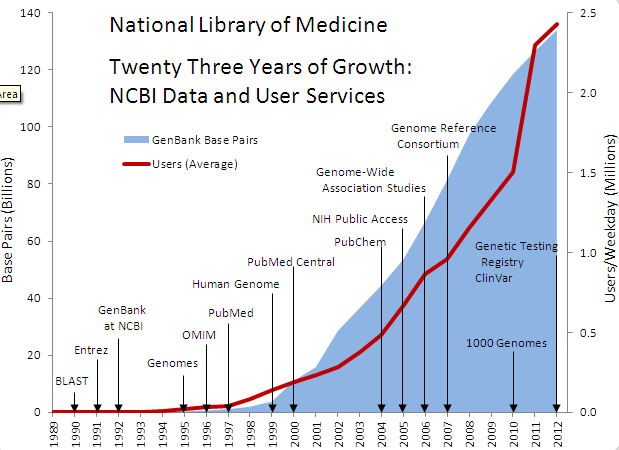

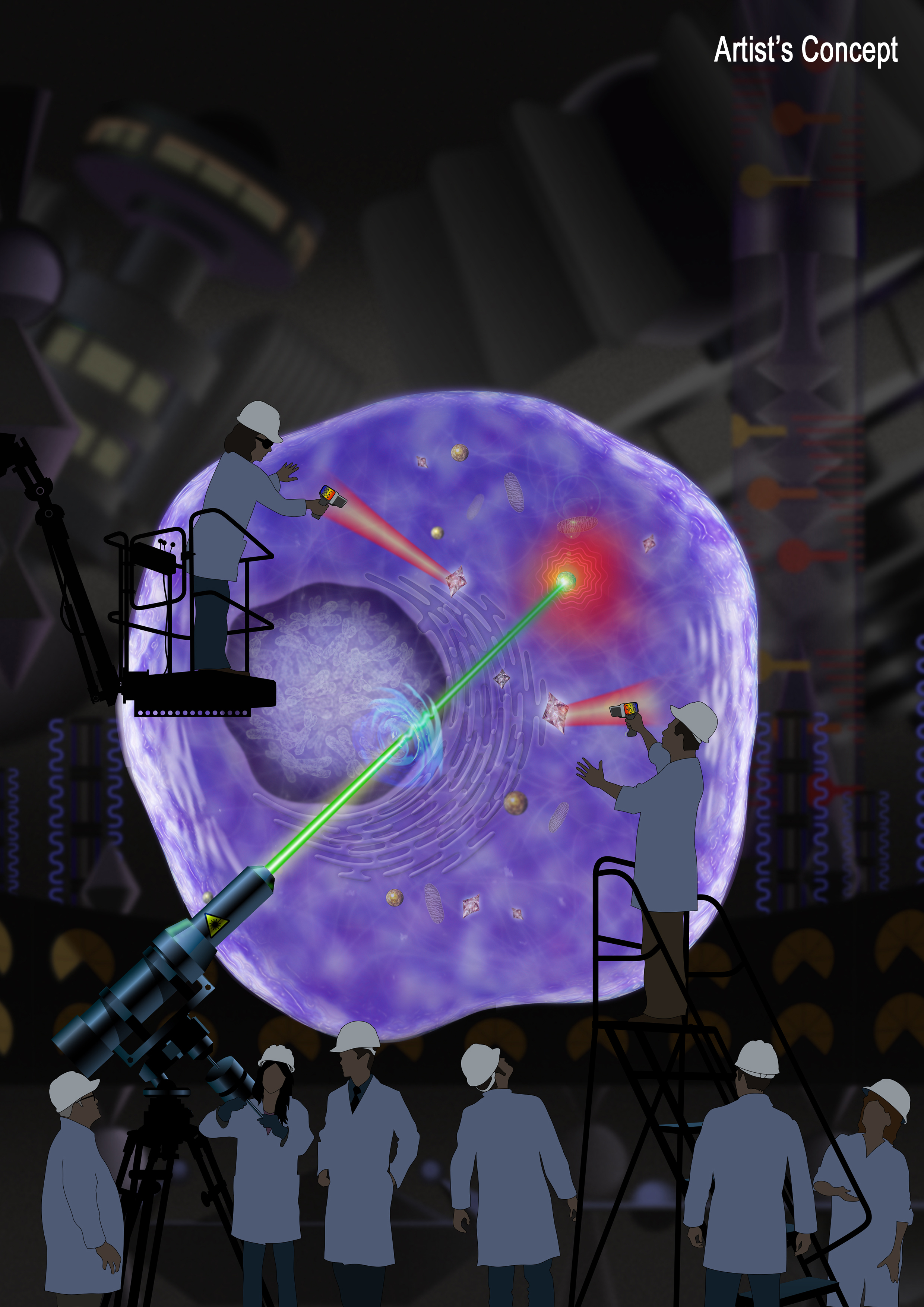

Simulations of the present and past universe are already being built, and you can see the trend: the number of moving parts is increasing.

Illustris Simulation

"A total of 19 million CPU hours was required, using 8,192 CPU cores.[1] The peak memory usage was approximately 25 TB of RAM.[1] A total of 136 snapshots were saved over the course of the simulation, totaling over 230 TB cumulative data volume.[2]"

Although this is miles away from your figures, storage is possible using inflatables.
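To put the Illustris numbers on the same scale as the DNA figure earlier in the thread, here is a rough Python sketch. The CPU hours, core count, snapshot count and 230 TB come from the quote, and the 455 exabytes per gram comes from the first post. Treating 1 TB as 1E12 bytes and assuming all cores were busy for the whole run are my own simplifications.

```python
# Figures from the Illustris quote
CPU_HOURS = 19e6
CORES = 8192
SNAPSHOTS = 136
TOTAL_TB = 230

# DNA density from earlier in the thread: 455 exabytes per gram, in bits
BITS_PER_GRAM_DNA = 455e18 * 8

# Assumption: every core was busy for the full run
wall_clock_days = CPU_HOURS / CORES / 24

# Average size of one saved snapshot
tb_per_snapshot = TOTAL_TB / SNAPSHOTS

# Assumption: 1 TB = 1E12 bytes
total_bits = TOTAL_TB * 1e12 * 8

# Mass of DNA that would hold the whole snapshot archive
dna_grams = total_bits / BITS_PER_GRAM_DNA

print(f"wall clock: about {wall_clock_days:.0f} days")
print(f"average snapshot: about {tb_per_snapshot:.2f} TB")
print(f"archive size: about {total_bits:.2g} bits")
print(f"DNA needed: about {dna_grams:.1g} grams")
```

So the full snapshot archive would fit in well under a microgram of DNA at that density, which gives a feel for how far today's simulations are from a Planck-scale grid.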

When we want to draft a prototype Neanderthal (later to personalise to the exact dead person) we reach into the lattice of components and inflate them. This is presently done by people but machines are coming that will do it zillions of times faster.

"Using 50,000-year-old fossils from France and a computer synthesizer, McCarthy’s team has generated a recording of how a Neanderthal would pronounce the letter “e.”"

http://www.fau.edu/e...08-04speaks.php

This topic is locked
